update

This commit is contained in:

1

.gitignore

vendored

1

.gitignore

vendored

@@ -8,3 +8,4 @@ _freeze/

|

||||

*_cache/

|

||||

*_files/

|

||||

SD/_*/

|

||||

homework/

|

||||

|

||||

@@ -1 +1 @@

|

||||

454c267b732cda3ca58332ab3883bec6

|

||||

34a734e5b3d0f378041ea746f8654ddc

|

||||

|

||||

154

SD/2.2_dataimport/_demo.qmd

Normal file

154

SD/2.2_dataimport/_demo.qmd

Normal file

@@ -0,0 +1,154 @@

|

||||

---

|

||||

title: "Lesson 7"

|

||||

format: html

|

||||

---

|

||||

|

||||

|

||||

```{r}

|

||||

require(tidyverse)

|

||||

|

||||

|

||||

files <- c(

|

||||

"../../data/01-sales.csv",

|

||||

"../../data/02-sales.csv",

|

||||

"../../data/03-sales.csv"

|

||||

)

|

||||

|

||||

files <- dir("../../data", pattern = "sales.csv", full.names = TRUE)

|

||||

|

||||

|

||||

readr::read_csv(files, id = "file") |>

|

||||

mutate(file = basename(file))

|

||||

```

|

||||

|

||||

|

||||

```{r}

|

||||

require(tidyverse)

|

||||

library(tidyverse)

|

||||

|

||||

files <- dir(

|

||||

"../../data/gapminder",

|

||||

pattern = "^[12][09][0-9][0-9].xlsx$",

|

||||

full.names = TRUE

|

||||

)

|

||||

|

||||

|

||||

alldf <- tibble::tibble()

|

||||

for (file in files) {

|

||||

alldf <- alldf |>

|

||||

bind_rows(

|

||||

readxl::read_xlsx(file) |>

|

||||

mutate(year = parse_number(basename(file)))

|

||||

)

|

||||

}

|

||||

|

||||

alldf |>

|

||||

ggplot(aes(x = lifeExp, y = gdpPercap)) +

|

||||

geom_point(aes(color = factor(year))) +

|

||||

geom_smooth(method = "lm", se = FALSE) +

|

||||

scale_y_log10(

|

||||

breaks = scales::trans_breaks("log10", function(x) 10^x),

|

||||

labels = scales::trans_format("log10", scales::math_format(10^.x))

|

||||

) +

|

||||

facet_wrap(~year, ncol = 4, scale = "fixed")

|

||||

```

|

||||

|

||||

# slope

|

||||

|

||||

```{r}

|

||||

file <- files[1]

|

||||

|

||||

lm(y ~ x, data)

|

||||

|

||||

m <- lm(log10(gdpPercap) ~ lifeExp, readxl::read_xlsx(file))

|

||||

|

||||

summary(m)

|

||||

|

||||

coef(m)[2]

|

||||

|

||||

slopes <- c()

|

||||

years <- c()

|

||||

for (file in files) {

|

||||

m <- lm(log10(gdpPercap) ~ lifeExp, readxl::read_xlsx(file))

|

||||

years <- c(years, parse_number(basename(file)))

|

||||

slopes <- c(slopes, coef(m)[2])

|

||||

}

|

||||

years

|

||||

slopes

|

||||

|

||||

|

||||

plot(years, as.numeric(slopes), type = "b")

|

||||

```

|

||||

|

||||

# purrr

|

||||

|

||||

|

||||

|

||||

```{r}

|

||||

require(tidyverse)

|

||||

df <- tibble(

|

||||

filename = dir(

|

||||

"../../data/gapminder",

|

||||

pattern = "^[12][09][0-9][0-9].xlsx$",

|

||||

full.names = TRUE

|

||||

)

|

||||

) |>

|

||||

dplyr::mutate(

|

||||

data = purrr::map(

|

||||

filename,

|

||||

\(x) readxl::read_xlsx(x)

|

||||

)

|

||||

) |>

|

||||

mutate(year = parse_number(basename(filename))) |>

|

||||

mutate(

|

||||

m = purrr::map(data, \(xxxx) lm(log10(gdpPercap) ~ lifeExp, data = xxxx))

|

||||

) |>

|

||||

mutate(slope = purrr::map_dbl(m, \(yyyy) coef(yyyy)[2])) |>

|

||||

unnest(data)

|

||||

|

||||

pvalue <- summary(df$m[[1]])$coefficients[2, 4]

|

||||

rsq <- summary(df$m[[1]])$r.squared

|

||||

|

||||

require(tidymodels)

|

||||

generics::tidy(df$m[[1]])

|

||||

|

||||

df |>

|

||||

ggplot(aes(x = lifeExp, y = gdpPercap)) +

|

||||

geom_point(aes(color = factor(year))) +

|

||||

geom_smooth(method = "lm", se = FALSE) +

|

||||

scale_y_log10(

|

||||

breaks = scales::trans_breaks("log10", function(x) 10^x),

|

||||

labels = scales::trans_format("log10", scales::math_format(10^.x))

|

||||

) +

|

||||

facet_wrap(~year, ncol = 4, scale = "fixed")

|

||||

|

||||

|

||||

df |>

|

||||

ggplot(aes(x = year, y = slope)) +

|

||||

geom_line() +

|

||||

geom_point()

|

||||

|

||||

|

||||

df

|

||||

df$slope[[1]]

|

||||

|

||||

coef(df$m[[1]])[2]

|

||||

|

||||

lm(y ~ x, data)

|

||||

|

||||

|

||||

df |>

|

||||

unnest(data) |>

|

||||

ggplot(aes(x = lifeExp, y = gdpPercap)) +

|

||||

geom_point(aes(color = factor(year))) +

|

||||

geom_smooth(method = "lm", se = FALSE) +

|

||||

scale_y_log10(

|

||||

breaks = scales::trans_breaks("log10", function(x) 10^x),

|

||||

labels = scales::trans_format("log10", scales::math_format(10^.x))

|

||||

) +

|

||||

facet_wrap(~year, ncol = 4, scale = "fixed")

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -197,9 +197,11 @@ annoying <- tibble(

|

||||

```{r}

|

||||

#| message: false

|

||||

|

||||

sales_files <- c("../../data/01-sales.csv",

|

||||

sales_files <- c(

|

||||

"../../data/01-sales.csv",

|

||||

"../../data/02-sales.csv",

|

||||

"../../data/03-sales.csv")

|

||||

"../../data/03-sales.csv"

|

||||

)

|

||||

read_csv(sales_files, id = "file")

|

||||

```

|

||||

|

||||

@@ -223,8 +225,11 @@ read_csv(sales_files, id = "file")

|

||||

## 批量读取

|

||||

|

||||

```{r}

|

||||

sales_files <- list.files("../../data",

|

||||

pattern = "sales\\.csv$", full.names = TRUE)

|

||||

sales_files <- list.files(

|

||||

"../../data",

|

||||

pattern = "sales\\.csv$",

|

||||

full.names = TRUE

|

||||

)

|

||||

sales_files

|

||||

```

|

||||

|

||||

@@ -283,8 +288,13 @@ metadf <- readxl::read_xlsx("../../data/airquality.xlsx")

|

||||

dir.create("../../data/metacity2/")

|

||||

metadf |>

|

||||

nest(sitedf = -site) |>

|

||||

mutate(flag = purrr::map2(site, sitedf,

|

||||

~ writexl::write_xlsx(.y, paste0("../../data/metacity2/", .x, ".xlsx"))))

|

||||

mutate(

|

||||

flag = purrr::map2(

|

||||

site,

|

||||

sitedf,

|

||||

~ writexl::write_xlsx(.y, paste0("../../data/metacity2/", .x, ".xlsx"))

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

@@ -305,10 +315,16 @@ if (FALSE) {

|

||||

writexl::write_xlsx(metanestdf$citydf, path = "../../data/meta_city.xlsx")

|

||||

dir.create("../../data/metacity/")

|

||||

metanestdf |>

|

||||

mutate(flag = purrr::map2(Area, citydf,

|

||||

~ writexl::write_xlsx(.y,

|

||||

mutate(

|

||||

flag = purrr::map2(

|

||||

Area,

|

||||

citydf,

|

||||

~ writexl::write_xlsx(

|

||||

.y,

|

||||

path = paste0("../../data/metacity/", .x, ".xlsx")

|

||||

)))

|

||||

)

|

||||

)

|

||||

)

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

115

SD/2.3_datatransform/_demo.qmd

Normal file

115

SD/2.3_datatransform/_demo.qmd

Normal file

@@ -0,0 +1,115 @@

|

||||

---

|

||||

title: "Lesson 8"

|

||||

format: html

|

||||

---

|

||||

|

||||

|

||||

```{r}

|

||||

require(tidyverse)

|

||||

library(nycflights13)

|

||||

|

||||

flights |>

|

||||

select(3:6) |>

|

||||

head(3) |>

|

||||

rename_all(~ gsub("_", "", .))

|

||||

|

||||

|

||||

flights |>

|

||||

select(3:6) |>

|

||||

head(3) |>

|

||||

rename_with(toupper, .cols = 2:4)

|

||||

|

||||

# 每月10号-15号,dep_delay > 100 的航班

|

||||

|

||||

# 每月哪个出发地origin的 dep_delay总时长最长

|

||||

|

||||

flights |>

|

||||

group_by(month, origin) |>

|

||||

summarize(n = n(), total_dep_delay = mean(dep_delay, na.rm = TRUE)) |>

|

||||

slice_max(total_dep_delay)

|

||||

|

||||

# arrange(month, origin, desc(total_dep_delay))

|

||||

|

||||

# 每月随机抽取一天,随机抽取三个航班

|

||||

|

||||

slice_sample(n = 1)

|

||||

|

||||

flights |>

|

||||

tidyr::nest(ymddf = -c(year, month, day)) |>

|

||||

group_by(year, month) |>

|

||||

slice_sample(n = 5) |>

|

||||

unnest(ymddf) |>

|

||||

group_by(year, month, day) |>

|

||||

slice_sample(n = 3)

|

||||

|

||||

|

||||

flights |>

|

||||

tidyr::nest(ymddf = -c(year, month, day)) |>

|

||||

group_by(year, month) |>

|

||||

slice_sample(n = 1) |>

|

||||

mutate(

|

||||

ymddf = purrr::map(ymddf, \(x) {

|

||||

x |>

|

||||

slice_sample(n = 3)

|

||||

})

|

||||

)

|

||||

|

||||

# 每月 每个出发地 周末的平均dep_delay 与 工作日的平均dep_delay 差值最大的3个航班

|

||||

|

||||

flights |>

|

||||

mutate(date = ymd(paste(year, month, day))) |>

|

||||

mutate(weekday = wday(date)) |>

|

||||

mutate(isworkday = if_else(between(weekday, 2, 6), "Yes", "No")) |>

|

||||

group_by(year, month, origin, flight, isworkday) |>

|

||||

summarize(mean_delay = mean(dep_delay, na.rm = TRUE)) |>

|

||||

tidyr::nest(diffdelaydf = c(isworkday, mean_delay)) |>

|

||||

filter(

|

||||

purrr::map(diffdelaydf, \(x) {

|

||||

nrow(x)

|

||||

}) >

|

||||

1

|

||||

) |>

|

||||

mutate(

|

||||

diffdelay = purrr::map_dbl(diffdelaydf, \(x) {

|

||||

x |>

|

||||

arrange(isworkday) |>

|

||||

pull(mean_delay) |>

|

||||

diff()

|

||||

})

|

||||

) |>

|

||||

group_by(year, month, origin) |>

|

||||

slice_max(diffdelay, n = 3)

|

||||

|

||||

|

||||

wday(today())

|

||||

|

||||

weekday()

|

||||

|

||||

|

||||

slice_sample(n = 1)

|

||||

|

||||

|

||||

flights |>

|

||||

group_by(month) |>

|

||||

slice_sample(n = 1)

|

||||

```

|

||||

|

||||

|

||||

|

||||

```{r}

|

||||

p1 <- flights |>

|

||||

group_by(year, month) |>

|

||||

summarize(n = n()) |>

|

||||

ggplot(aes(month, n)) +

|

||||

geom_point(shape = 21, size = 6, color = "black", fill = "red") +

|

||||

geom_line()

|

||||

|

||||

ggsave("./a.pdf")

|

||||

ggsave("./a.png")

|

||||

|

||||

require(patchwork)

|

||||

|

||||

p1 / p1

|

||||

```

|

||||

|

||||

|

||||

BIN

SD/2.3_datatransform/a.pdf

Normal file

BIN

SD/2.3_datatransform/a.pdf

Normal file

Binary file not shown.

BIN

SD/2.3_datatransform/a.png

Normal file

BIN

SD/2.3_datatransform/a.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 271 KiB |

@@ -14,8 +14,10 @@ format: html

|

||||

# 下载至临时文件

|

||||

if (FALSE) {

|

||||

tmpxlsxpath <- file.path(tempdir(), "airquality.xlsx")

|

||||

download.file("https://git.drwater.net/course/RWEP/raw/branch/PUB/data/airquality.xlsx",

|

||||

destfile = tmpxlsxpath)

|

||||

download.file(

|

||||

"https://git.drwater.net/course/RWEP/raw/branch/PUB/data/airquality.xlsx",

|

||||

destfile = tmpxlsxpath

|

||||

)

|

||||

airqualitydf <- readxl::read_xlsx(tmpxlsxpath, sheet = 2)

|

||||

metadf <- readxl::read_xlsx(tmpxlsxpath, sheet = 1)

|

||||

saveRDS(airqualitydf, "./airqualitydf.RDS")

|

||||

@@ -41,7 +43,12 @@ airqualitydf |>

|

||||

left_join(metadf |> select(site, city = Area)) |>

|

||||

group_by(city) |>

|

||||

filter(n() > 5) |>

|

||||

summarize(p = sum(AQI.median < quantile(airqualitydf$AQI, probs = 0.5, na.rm = TRUE)) / n()) |>

|

||||

summarize(

|

||||

p = sum(

|

||||

AQI.median < quantile(airqualitydf$AQI, probs = 0.5, na.rm = TRUE)

|

||||

) /

|

||||

n()

|

||||

) |>

|

||||

top_n(10, p)

|

||||

|

||||

|

||||

@@ -57,12 +64,11 @@ airqualitydf |>

|

||||

left_join(metadf |> select(site, city = Area)) |>

|

||||

group_by(city) |>

|

||||

filter(length(unique(site)) >= 5) |>

|

||||

summarize(p = sum(AQI < quantile(airqualitydf$AQI, probs = 0.2,

|

||||

na.rm = TRUE)) / n()) |>

|

||||

summarize(

|

||||

p = sum(AQI < quantile(airqualitydf$AQI, probs = 0.2, na.rm = TRUE)) / n()

|

||||

) |>

|

||||

slice_max(p, n = 10) |>

|

||||

knitr::kable()

|

||||

|

||||

|

||||

```

|

||||

|

||||

|

||||

@@ -83,43 +89,58 @@ if (FALSE) {

|

||||

left_join(metadf |> select(site, city = Area)) |>

|

||||

group_by(city) |>

|

||||

filter(length(unique(site)) >= 5) |>

|

||||

mutate(dayornight = factor(ifelse(between(hour(datetime), 8, 20), "day", "night"),

|

||||

levels = c("day", "night"))

|

||||

mutate(

|

||||

dayornight = factor(

|

||||

ifelse(between(hour(datetime), 8, 20), "day", "night"),

|

||||

levels = c("day", "night")

|

||||

)

|

||||

) |>

|

||||

group_by(city) |>

|

||||

nest(citydf = -city) |>

|

||||

mutate(median_diff = purrr::map_dbl(citydf, ~

|

||||

.x |>

|

||||

mutate(

|

||||

median_diff = purrr::map_dbl(

|

||||

citydf,

|

||||

~ .x |>

|

||||

specify(AQI ~ dayornight) |>

|

||||

calculate(stat = "diff in medians", order = c("day", "night")) |>

|

||||

pull(stat)

|

||||

)) |>

|

||||

)

|

||||

) |>

|

||||

ungroup() |>

|

||||

# slice_sample(n = 12) |>

|

||||

mutate(null_dist = purrr::map(citydf, ~

|

||||

.x |>

|

||||

mutate(

|

||||

null_dist = purrr::map(

|

||||

citydf,

|

||||

~ .x |>

|

||||

specify(AQI ~ dayornight) |>

|

||||

hypothesize(null = "independence") |>

|

||||

generate(reps = 1000, type = "permute") |>

|

||||

calculate(stat = "diff in medians", order = c("day", "night"))

|

||||

)) |>

|

||||

mutate(p_value = purrr::map2_dbl(null_dist, median_diff,

|

||||

)

|

||||

) |>

|

||||

mutate(

|

||||

p_value = purrr::map2_dbl(

|

||||

null_dist,

|

||||

median_diff,

|

||||

~ get_p_value(.x, obs_stat = .y, direction = "both") |>

|

||||

pull(p_value)

|

||||

)) |>

|

||||

)

|

||||

) |>

|

||||

mutate(sigdiff = ifelse(p_value < 0.01, "显著差异", "无显著差异")) |>

|

||||

mutate(fig = purrr::pmap(list(null_dist, median_diff, city, sigdiff),

|

||||

mutate(

|

||||

fig = purrr::pmap(

|

||||

list(null_dist, median_diff, city, sigdiff),

|

||||

~ visualize(..1) +

|

||||

shade_p_value(obs_stat = ..2, direction = "both") +

|

||||

ggtitle(paste0(..3, ":", ..4)) +

|

||||

theme_sci(2, 2)

|

||||

)) |>

|

||||

)

|

||||

) |>

|

||||

arrange(p_value)

|

||||

saveRDS(testdf, "./testdf.RDS")

|

||||

}

|

||||

|

||||

if (FALSE) {

|

||||

|

||||

lang <- "cn"

|

||||

require(dwfun)

|

||||

require(rmdify)

|

||||

@@ -138,15 +159,16 @@ testdf |>

|

||||

nest(grpdf = -grp) |>

|

||||

ungroup() |>

|

||||

# slice(1) |>

|

||||

mutate(gp = purrr::map(grpdf,

|

||||

mutate(

|

||||

gp = purrr::map(

|

||||

grpdf,

|

||||

~ (.x |>

|

||||

pull(fig)) |>

|

||||

patchwork::wrap_plots(ncol = 3) +

|

||||

dwfun::theme_sci(5, 7))) |>

|

||||

dwfun::theme_sci(5, 7)

|

||||

)

|

||||

) |>

|

||||

pull(gp)

|

||||

|

||||

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

|

||||

254

SD/5.1_model/_demo.qmd

Normal file

254

SD/5.1_model/_demo.qmd

Normal file

@@ -0,0 +1,254 @@

|

||||

---

|

||||

title: "Lesson 9"

|

||||

format: html

|

||||

---

|

||||

|

||||

|

||||

```{r}

|

||||

# install.packages("tidymodels")

|

||||

|

||||

require(tidymodels)

|

||||

taxi

|

||||

|

||||

taxisplit <- initial_split(taxi, prop = 0.8)

|

||||

taxi_train <- training(taxisplit)

|

||||

taxi_test <- testing(taxisplit)

|

||||

|

||||

tree_spec <-

|

||||

decision_tree(cost_complexity = 0.002) %>%

|

||||

set_mode("classification")

|

||||

|

||||

taxi_fit <- workflow() %>%

|

||||

add_formula(tip ~ .) %>%

|

||||

add_model(tree_spec) %>%

|

||||

fit(data = taxi_train)

|

||||

```

|

||||

|

||||

|

||||

```{r}

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

relocate(tip, .pred_class, .pred_yes, .pred_no)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

conf_mat(truth = tip, estimate = .pred_class)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

accuracy(truth = tip, estimate = .pred_class)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

sensitivity(truth = tip, estimate = .pred_class)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

specificity(truth = tip, estimate = .pred_class)

|

||||

|

||||

taxi_metrics <- metric_set(accuracy, specificity, sensitivity)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

taxi_metrics(truth = tip, estimate = .pred_class)

|

||||

|

||||

taxi_metrics <- metric_set(accuracy, specificity, sensitivity)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

group_by(local) %>%

|

||||

taxi_metrics(truth = tip, estimate = .pred_class)

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

roc_curve(truth = tip, .pred_yes) %>%

|

||||

autoplot()

|

||||

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train)

|

||||

|

||||

|

||||

augment(taxi_fit, new_data = taxi_train) %>%

|

||||

roc_curve(truth = tip, .pred_yes) |>

|

||||

ggplot(aes(1 - sensitivity, specificity)) +

|

||||

geom_point() +

|

||||

geom_line() +

|

||||

geom_abline(slope = 1)

|

||||

```

|

||||

|

||||

|

||||

# Cross Validation

|

||||

|

||||

```{r}

|

||||

vfold_cv(taxi_train, v = 10) |>

|

||||

pull(splits) |>

|

||||

nth(1)

|

||||

taxi_folds <- vfold_cv(taxi_train)

|

||||

taxi_folds$splits[1:3]

|

||||

|

||||

vfold_cv(taxi_train, strata = tip)

|

||||

|

||||

set.seed(123)

|

||||

taxi_folds <- vfold_cv(taxi_train, v = 10, strata = tip)

|

||||

taxi_folds

|

||||

|

||||

taxi_wflow <- workflow() %>%

|

||||

add_formula(tip ~ .) %>%

|

||||

add_model(tree_spec)

|

||||

|

||||

|

||||

taxi_res <- fit_resamples(taxi_wflow, taxi_folds)

|

||||

taxi_res

|

||||

|

||||

|

||||

taxi_res$.metrics[[1]]

|

||||

|

||||

|

||||

taxi_res$splits[[1]]

|

||||

|

||||

analysis(taxi_res$splits[[1]])

|

||||

|

||||

analysis(taxi_res$splits[[1]])

|

||||

assessment(taxi_res$splits[[1]])

|

||||

|

||||

|

||||

taxi_res %>%

|

||||

collect_metrics()

|

||||

|

||||

taxi_res %>%

|

||||

collect_metrics() %>%

|

||||

select(.metric, mean, n)

|

||||

|

||||

# Save the assessment set results

|

||||

ctrl_taxi <- control_resamples(save_pred = TRUE)

|

||||

taxi_res <- fit_resamples(taxi_wflow, taxi_folds, control = ctrl_taxi)

|

||||

|

||||

taxi_res

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

# NLA2007 cyanophyta model

|

||||

|

||||

|

||||

```{r}

|

||||

require(tidyverse)

|

||||

sitedf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2014-01/nla2007_sampledlakeinformation_20091113.csv"

|

||||

) |>

|

||||

select(

|

||||

SITE_ID,

|

||||

lon = LON_DD,

|

||||

lat = LAT_DD,

|

||||

name = LAKENAME,

|

||||

area = LAKEAREA,

|

||||

zmax = DEPTHMAX

|

||||

) |>

|

||||

group_by(SITE_ID) |>

|

||||

summarize(

|

||||

lon = mean(lon, na.rm = TRUE),

|

||||

lat = mean(lat, na.rm = TRUE),

|

||||

name = unique(name),

|

||||

area = mean(area, na.rm = TRUE),

|

||||

zmax = mean(zmax, na.rm = TRUE)

|

||||

)

|

||||

|

||||

|

||||

visitdf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2013-09/nla2007_profile_20091008.csv"

|

||||

) |>

|

||||

select(SITE_ID, date = DATE_PROFILE, year = YEAR, visit = VISIT_NO) |>

|

||||

distinct()

|

||||

|

||||

|

||||

waterchemdf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2013-09/nla2007_profile_20091008.csv"

|

||||

) |>

|

||||

select(

|

||||

SITE_ID,

|

||||

date = DATE_PROFILE,

|

||||

depth = DEPTH,

|

||||

temp = TEMP_FIELD,

|

||||

do = DO_FIELD,

|

||||

ph = PH_FIELD,

|

||||

cond = COND_FIELD,

|

||||

)

|

||||

|

||||

sddf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2014-10/nla2007_secchi_20091008.csv"

|

||||

) |>

|

||||

select(

|

||||

SITE_ID,

|

||||

date = DATE_SECCHI,

|

||||

sd = SECMEAN,

|

||||

clear_to_bottom = CLEAR_TO_BOTTOM

|

||||

)

|

||||

|

||||

trophicdf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2014-10/nla2007_trophic_conditionestimate_20091123.csv"

|

||||

) |>

|

||||

select(SITE_ID, visit = VISIT_NO, tp = PTL, tn = NTL, chla = CHLA) |>

|

||||

left_join(visitdf, by = c("SITE_ID", "visit")) |>

|

||||

select(-year, -visit) |>

|

||||

group_by(SITE_ID, date) |>

|

||||

summarize(

|

||||

tp = mean(tp, na.rm = TRUE),

|

||||

tn = mean(tn, na.rm = TRUE),

|

||||

chla = mean(chla, na.rm = TRUE)

|

||||

)

|

||||

|

||||

|

||||

phytodf <- readr::read_csv(

|

||||

"https://www.epa.gov/sites/default/files/2014-10/nla2007_phytoplankton_softalgaecount_20091023.csv"

|

||||

) |>

|

||||

select(

|

||||

SITE_ID,

|

||||

date = DATEPHYT,

|

||||

depth = SAMPLE_DEPTH,

|

||||

phyta = DIVISION,

|

||||

genus = GENUS,

|

||||

species = SPECIES,

|

||||

tax = TAXANAME,

|

||||

abund = ABUND

|

||||

) |>

|

||||

mutate(phyta = gsub(" .*$", "", phyta)) |>

|

||||

filter(!is.na(genus)) |>

|

||||

group_by(SITE_ID, date, depth, phyta, genus) |>

|

||||

summarize(abund = sum(abund, na.rm = TRUE)) |>

|

||||

nest(phytodf = -c(SITE_ID, date))

|

||||

|

||||

phytodf$phytodf[[1]]

|

||||

|

||||

|

||||

envdf <- waterchemdf |>

|

||||

filter(depth < 2) |>

|

||||

select(-depth) |>

|

||||

group_by(SITE_ID, date) |>

|

||||

summarise_all(~ mean(., na.rm = TRUE)) |>

|

||||

ungroup() |>

|

||||

left_join(sddf, by = c("SITE_ID", "date")) |>

|

||||

left_join(trophicdf, by = c("SITE_ID", "date"))

|

||||

|

||||

nla <- envdf |>

|

||||

left_join(phytodf, by = c("SITE_ID", "date")) |>

|

||||

left_join(sitedf, by = "SITE_ID") |>

|

||||

filter(!purrr::map_lgl(phytodf, is.null)) |>

|

||||

mutate(

|

||||

cyanophyta = purrr::map(

|

||||

phytodf,

|

||||

\(x) {

|

||||

x |>

|

||||

dplyr::filter(phyta == "Cyanophyta") |>

|

||||

summarize(cyanophyta = sum(abund, na.rm = TRUE))

|

||||

}

|

||||

)

|

||||

) |>

|

||||

unnest(cyanophyta) |>

|

||||

select(-phyta) |>

|

||||

mutate(clear_to_bottom = ifelse(is.na(clear_to_bottom), TRUE, FALSE))

|

||||

|

||||

# library(rmdify)

|

||||

# library(dwfun)

|

||||

# dwfun::init()

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -1,166 +0,0 @@

|

||||

---

|

||||

title: "大数据分析工具"

|

||||

subtitle: 《区域水环境污染数据分析实践》<br>Data analysis practice of regional water environment pollution

|

||||

author: 苏命、王为东<br>中国科学院大学资源与环境学院<br>中国科学院生态环境研究中心

|

||||

date: today

|

||||

lang: zh

|

||||

format:

|

||||

revealjs:

|

||||

theme: dark

|

||||

slide-number: true

|

||||

chalkboard:

|

||||

buttons: true

|

||||

preview-links: auto

|

||||

lang: zh

|

||||

toc: true

|

||||

toc-depth: 1

|

||||

toc-title: 大纲

|

||||

logo: ./_extensions/inst/img/ucaslogo.png

|

||||

css: ./_extensions/inst/css/revealjs.css

|

||||

pointer:

|

||||

key: "p"

|

||||

color: "#32cd32"

|

||||

pointerSize: 18

|

||||

revealjs-plugins:

|

||||

- pointer

|

||||

filters:

|

||||

- d2

|

||||

---

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

knitr::opts_chunk$set(echo = TRUE)

|

||||

source("../../coding/_common.R")

|

||||

library(nycflights13)

|

||||

library(tidyverse)

|

||||

|

||||

```

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配数字:

|

||||

|

||||

- \d:匹配任意数字字符。

|

||||

- \d+:匹配一个或多个数字字符。

|

||||

- [0-9]: 匹配数字

|

||||

|

||||

### 匹配字母:

|

||||

|

||||

- \w:匹配任意字母、数字或下划线字符。

|

||||

- \w+:匹配一个或多个字母、数字或下划线字符。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配空白字符:

|

||||

|

||||

- \s:匹配任意空白字符,包括空格、制表符、换行符等。

|

||||

- \s+:匹配一个或多个空白字符。

|

||||

|

||||

### 匹配特定字符:

|

||||

|

||||

- [abc]:匹配字符 a、b 或 c 中的任意一个。

|

||||

- [a-z]:匹配任意小写字母。

|

||||

- [A-Z]:匹配任意大写字母。

|

||||

- [0-9]:匹配任意数字。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配重复次数:

|

||||

|

||||

- {n}:匹配前一个字符恰好 n 次。

|

||||

- {n,}:匹配前一个字符至少 n 次。

|

||||

- {n,m}:匹配前一个字符至少 n 次,但不超过 m 次。

|

||||

|

||||

### 匹配边界:

|

||||

|

||||

- ^:匹配字符串的开头。

|

||||

- $:匹配字符串的结尾。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配特殊字符:

|

||||

|

||||

- \:转义特殊字符,使其按字面意义匹配。

|

||||

- .:匹配任意单个字符。

|

||||

- |:表示“或”关系,匹配两个或多个表达式之一。

|

||||

|

||||

#### 匹配次数:

|

||||

|

||||

- *:匹配前一个字符零次或多次。

|

||||

- +:匹配前一个字符一次或多次。

|

||||

- ?:匹配前一个字符零次或一次。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 分组和捕获:

|

||||

|

||||

- ():将一系列模式组合成一个单元,可与特殊字符一起使用。

|

||||

|

||||

### 预定义字符集:

|

||||

|

||||

- \d:任意数字,相当于 [0-9]。

|

||||

- \w:任意字母、数字或下划线字符,相当于 [a-zA-Z0-9_]。

|

||||

- \s:任意空白字符,相当于 [ \t\n\r\f\v]。

|

||||

|

||||

|

||||

|

||||

## 实例

|

||||

|

||||

```{r}

|

||||

library(babynames)

|

||||

(x <- c("apple", "apppple", "abc123def"))

|

||||

x[str_detect(x, "[0-9]")]

|

||||

x[str_detect(x, "abc[0-9]+")]

|

||||

x[str_detect(x, "pp")]

|

||||

x[str_detect(x, "p{4}")]

|

||||

x[str_detect(x, "p{4}")]

|

||||

x[str_detect("apple", "ap*")]

|

||||

x[str_detect("apple", "app*")]

|

||||

x[str_detect("apple", "a..le")]

|

||||

```

|

||||

|

||||

## 练习

|

||||

|

||||

|

||||

找出`babyname`中名字含有ar的行

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

babynames |>

|

||||

filter(str_detect(name, "ar"))

|

||||

```

|

||||

|

||||

## 练习

|

||||

|

||||

|

||||

找出`babyname`中名字含有ar或者以ry结尾的行。

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

babynames |>

|

||||

filter(str_detect(name, "ar"))

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

## GNU/Linux服务器

|

||||

|

||||

- `ssh`, `scp`

|

||||

- `bash`

|

||||

- grep

|

||||

- sed

|

||||

- awk

|

||||

- find

|

||||

- xargs

|

||||

- `Editor`

|

||||

- `Virtual Studio Code`

|

||||

- `Vim`

|

||||

- `Emacs`

|

||||

|

||||

|

||||

|

||||

## 欢迎讨论!{.center}

|

||||

|

||||

|

||||

`r rmdify::slideend(wechat = FALSE, type = "public", tel = FALSE, thislink = "https://drc.drwater.net/course/public/RWEP/PUB/SD/")`

|

||||

517

SD/5.2_空间分析/index.qmd

Normal file

517

SD/5.2_空间分析/index.qmd

Normal file

@@ -0,0 +1,517 @@

|

||||

---

|

||||

title: "空间分析"

|

||||

subtitle: 《区域水环境污染数据分析实践》<br>Data analysis practice of regional water environment pollution

|

||||

author: 苏命、王为东<br>中国科学院大学资源与环境学院<br>中国科学院生态环境研究中心

|

||||

date: today

|

||||

lang: zh

|

||||

format:

|

||||

revealjs:

|

||||

theme: dark

|

||||

slide-number: true

|

||||

chalkboard:

|

||||

buttons: true

|

||||

preview-links: auto

|

||||

lang: zh

|

||||

toc: true

|

||||

toc-depth: 1

|

||||

toc-title: 大纲

|

||||

logo: ./_extensions/inst/img/ucaslogo.png

|

||||

css: ./_extensions/inst/css/revealjs.css

|

||||

pointer:

|

||||

key: "p"

|

||||

color: "#32cd32"

|

||||

pointerSize: 18

|

||||

revealjs-plugins:

|

||||

- pointer

|

||||

filters:

|

||||

- d2

|

||||

knitr:

|

||||

opts_chunk:

|

||||

dev: "svg"

|

||||

retina: 3

|

||||

execute:

|

||||

freeze: auto

|

||||

cache: true

|

||||

echo: true

|

||||

fig-width: 5

|

||||

fig-height: 6

|

||||

---

|

||||

|

||||

|

||||

## 目录

|

||||

1. 空间数据基础概念

|

||||

2. Simple Features (SF) 核心规范

|

||||

3. SFEXT扩展功能实战

|

||||

4. 空间关系与几何操作

|

||||

5. 空间统计分析方法

|

||||

6. 综合实战案例

|

||||

|

||||

|

||||

|

||||

## 1. 空间数据基础概念

|

||||

|

||||

### 空间数据类型体系

|

||||

```r

|

||||

library(sf)

|

||||

# 创建示例几何类型

|

||||

st_point(c(0, 0)) # 点

|

||||

st_linestring(rbind(c(0, 0), c(1, 1))) # 线

|

||||

st_polygon(list(rbind(c(0, 0), c(1, 0), c(1, 1), c(0, 0)))) # 面

|

||||

```

|

||||

|

||||

### 坐标参考系(CRS)

|

||||

```r

|

||||

# 常用坐标系定义

|

||||

wgs84 <- st_crs(4326) # 经纬度坐标

|

||||

utm50n <- st_crs(32650) # UTM 50N投影

|

||||

cat(st_crs(wgs84)$proj4string)

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 2. Simple Features (SF) 核心规范

|

||||

|

||||

### 创建SF对象

|

||||

```r

|

||||

# 从数据框转换

|

||||

df <- data.frame(

|

||||

city = c("Beijing", "Shanghai"),

|

||||

pop = c(2171, 2424),

|

||||

geometry = st_sfc(

|

||||

st_point(c(116.4, 39.9)),

|

||||

st_point(c(121.5, 31.2))

|

||||

)

|

||||

)

|

||||

sf_df <- st_as_sf(df, crs = 4326)

|

||||

```

|

||||

|

||||

### 数据IO操作

|

||||

```r

|

||||

# 写入/读取空间文件

|

||||

st_write(sf_df, "cities.shp")

|

||||

new_sf <- st_read("cities.shp")

|

||||

|

||||

# 从内置数据集加载

|

||||

nc <- st_read(system.file("shape/nc.shp", package = "sf"))

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 3. SFEXT扩展功能实战

|

||||

|

||||

### 高级几何生成

|

||||

```r

|

||||

remotes::install_github("r-spatial/sfext")

|

||||

library(sfext)

|

||||

|

||||

# 生成渔网网格

|

||||

grid <- st_regular_grid(

|

||||

xmin = 0,

|

||||

ymin = 0,

|

||||

xmax = 100,

|

||||

ymax = 100,

|

||||

cell_size = 10

|

||||

)

|

||||

plot(grid)

|

||||

```

|

||||

|

||||

### 空间索引加速

|

||||

```r

|

||||

# 创建空间索引

|

||||

system.time(

|

||||

without_idx <- st_intersects(nc, nc)

|

||||

)

|

||||

|

||||

system.time(

|

||||

with_idx <- st_intersects(st_make_valid(nc), sparse = TRUE)

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 4. 空间关系与操作

|

||||

|

||||

### 拓扑关系判断

|

||||

```r

|

||||

p1 <- st_point(c(0, 0))

|

||||

p2 <- st_point(c(1, 1))

|

||||

st_distance(p1, p2) # 距离计算

|

||||

|

||||

poly <- st_polygon(list(rbind(c(0, 0), c(1, 0), c(1, 1), c(0, 0))))

|

||||

st_contains(poly, p1) # 包含关系

|

||||

```

|

||||

|

||||

### 几何变换操作

|

||||

```r

|

||||

# 缓冲区分析

|

||||

buf <- st_buffer(nc[1, ], dist = 0.1)

|

||||

plot(buf)

|

||||

plot(nc[1, ]$geometry, add = TRUE, col = 'red')

|

||||

|

||||

# 凸包计算

|

||||

convex <- st_convex_hull(nc)

|

||||

plot(convex)

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 5. 空间统计分析方法

|

||||

|

||||

### 点模式分析

|

||||

```r

|

||||

library(spatstat)

|

||||

# 创建点模式对象

|

||||

ppp <- ppp(x = runif(100), y = runif(100), window = owin(c(0, 1), c(0, 1)))

|

||||

|

||||

# K函数分析

|

||||

K <- Kest(ppp)

|

||||

plot(K, main = "Ripley's K函数")

|

||||

```

|

||||

|

||||

### 空间自相关检验

|

||||

```r

|

||||

library(spdep)

|

||||

# Moran's I检验

|

||||

nb <- poly2nb(nc)

|

||||

lw <- nb2listw(nb)

|

||||

moran.test(nc$BIR74, lw)

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 6. 综合实战案例

|

||||

|

||||

### 城市公园服务区分析

|

||||

```r

|

||||

library(osmdata)

|

||||

library(sfnetworks)

|

||||

|

||||

# 获取OSM数据

|

||||

parks <- opq("New York") %>%

|

||||

add_osm_feature("leisure", "park") %>%

|

||||

osmdata_sf()

|

||||

|

||||

roads <- opq("New York") %>%

|

||||

add_osm_feature("highway") %>%

|

||||

osmdata_sf()

|

||||

|

||||

# 构建网络分析

|

||||

net <- as_sfnetwork(roads$osm_lines) %>%

|

||||

st_transform(32618)

|

||||

|

||||

# 计算服务范围(示例)

|

||||

service_area <- st_network_blend(net, parks$osm_polygons) %>%

|

||||

st_buffer(500) # 500米服务区

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 可视化增强技巧

|

||||

|

||||

### 分层绘图

|

||||

|

||||

```r

|

||||

library(ggplot2)

|

||||

ggplot() +

|

||||

geom_sf(data = nc, aes(fill = BIR74)) +

|

||||

geom_sf(data = service_area, alpha = 0.3) +

|

||||

scale_fill_viridis_c() +

|

||||

theme_minimal()

|

||||

```

|

||||

|

||||

### 交互可视化

|

||||

|

||||

```r

|

||||

library(mapview)

|

||||

mapview(nc, zcol = "BIR74", burst = TRUE) +

|

||||

mapview(service_area, alpha.regions = 0.2)

|

||||

```

|

||||

|

||||

# 实践案例

|

||||

|

||||

|

||||

## 中国地图

|

||||

|

||||

::: panel-tabset

|

||||

### Code

|

||||

|

||||

|

||||

```{r}

|

||||

#| echo: true

|

||||

#| eval: false

|

||||

#| out-width: 50%

|

||||

library(tidyverse)

|

||||

library(sf)

|

||||

|

||||

# 更稳定的预处理数据源(含港澳台)

|

||||

# china <- st_read("https://geo.datav.aliyun.com/areas_v3/bound/100000.json")

|

||||

|

||||

china <- st_read("./中华人民共和国.json", quiet = TRUE)

|

||||

|

||||

library(ggplot2)

|

||||

|

||||

ggplot(china) +

|

||||

geom_sf(fill = "#F6F6F6", color = "#666666", size = 0.3) +

|

||||

theme_void() +

|

||||

labs(title = "China")

|

||||

|

||||

# 添加模拟经济数据(实际应用可连接统计年鉴)

|

||||

set.seed(123)

|

||||

china$gdp <- runif(nrow(china), 100, 1000) # 模拟GDP数据(亿元)

|

||||

|

||||

china |>

|

||||

ggplot() +

|

||||

geom_sf(aes(fill = gdp), color = "gray90", size = 0.2) +

|

||||

scale_fill_gradientn(

|

||||

colours = c("#2c7bb6", "#abd9e9", "#ffffbf", "#fdae61", "#d7191c"),

|

||||

name = NULL

|

||||

) +

|

||||

theme_void() +

|

||||

theme(legend.position = c(0.85, 0.3))

|

||||

```

|

||||

|

||||

### Output

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

#| out-width: 80%

|

||||

#| fig-width: 6

|

||||

#| fig-height: 3

|

||||

library(tidyverse)

|

||||

library(sf)

|

||||

|

||||

# 更稳定的预处理数据源(含港澳台)

|

||||

# china <- st_read("https://geo.datav.aliyun.com/areas_v3/bound/100000.json")

|

||||

|

||||

china <- st_read("./中华人民共和国.json", quiet = TRUE)

|

||||

|

||||

library(ggplot2)

|

||||

|

||||

ggplot(china) +

|

||||

geom_sf(fill = "#F6F6F6", color = "#666666", size = 0.3) +

|

||||

theme_void() +

|

||||

labs(title = "China")

|

||||

|

||||

# 添加模拟经济数据(实际应用可连接统计年鉴)

|

||||

set.seed(123)

|

||||

china$gdp <- runif(nrow(china), 100, 1000) # 模拟GDP数据(亿元)

|

||||

|

||||

china |>

|

||||

ggplot() +

|

||||

geom_sf(aes(fill = gdp), color = "gray90", size = 0.2) +

|

||||

scale_fill_gradientn(

|

||||

colours = c("#2c7bb6", "#abd9e9", "#ffffbf", "#fdae61", "#d7191c"),

|

||||

name = NULL

|

||||

) +

|

||||

theme_void() +

|

||||

theme(legend.position = c(0.85, 0.3))

|

||||

```

|

||||

|

||||

:::

|

||||

|

||||

|

||||

## Kriging

|

||||

|

||||

::: panel-tabset

|

||||

### Code

|

||||

|

||||

```{r}

|

||||

#| echo: true

|

||||

#| eval: false

|

||||

#| out-width: 50%

|

||||

require(sf)

|

||||

require(sp)

|

||||

require(gstat)

|

||||

require(raster)

|

||||

require(ggplot2)

|

||||

# 读取太湖边界

|

||||

lake_boundary <- st_read("../../data/taihu.shp") |>

|

||||

sf::st_make_valid()

|

||||

main_water <- st_difference(

|

||||

lake_boundary[lake_boundary$Name == "boundary", ],

|

||||

st_union(lake_boundary[lake_boundary$Name != "boundary", ])

|

||||

)

|

||||

|

||||

get_kriging <- function(indf, Yvarname, grid = NULL, boundsf = main_water) {

|

||||

insf <- indf |>

|

||||

sfext::df_to_sf(crs = 4326, coords = c("long", "lat")) |>

|

||||

dplyr::select(-c("long", "lat")) |>

|

||||

dplyr::rename(Y = tidyselect::all_of(Yvarname))

|

||||

if (is.null(grid)) {

|

||||

# 1. 将sf边界转为terra格式

|

||||

v <- terra::vect(boundsf)

|

||||

# 2. 创建基础网格

|

||||

base_grid <- terra::rast(v, resolution = 0.002) # 基础低分辨率

|

||||

# 3. 创建高分辨率区域(例如边界附近)

|

||||

buffer_zone <- buffer(v, width = 0.005) # 边界附近创建缓冲区

|

||||

hi_res_grid <- terra::rast(buffer_zone, resolution = 0.001)

|

||||

# 4. 合并网格

|

||||

final_grid <- merge(base_grid, hi_res_grid)

|

||||

# 5. 转为点并裁剪

|

||||

grid <- terra::as.points(final_grid) |>

|

||||

sf::st_as_sf() |>

|

||||

sf::st_filter(main_water)

|

||||

}

|

||||

insp <- sf::as_Spatial(insf)

|

||||

fit <- automap::autofitVariogram(Y ~ 1, insp)

|

||||

# 克里金插值

|

||||

m <- gstat::gstat(

|

||||

formula = Y ~ 1,

|

||||

data = insp,

|

||||

model = fit$var_model

|

||||

)

|

||||

predsf <- predict(m, grid) |>

|

||||

sf::st_as_sf()

|

||||

outsf <- predsf |>

|

||||

st_coordinates() |>

|

||||

as.data.frame() |>

|

||||

cbind(pred = predsf$var1.pred)

|

||||

return(outsf)

|

||||

}

|

||||

|

||||

|

||||

wqdf <- readxl::read_xlsx("../../data/wqdata.xlsx")

|

||||

|

||||

if (FALSE) {

|

||||

wqsf <- wqdf |>

|

||||

nest(datedf = -date) |>

|

||||

dplyr::mutate(

|

||||

krigingsf = purrr::map(datedf, \(x) {

|

||||

get_kriging(x, "conc", boundsf = main_water)

|

||||

})

|

||||

)

|

||||

saveRDS(wqsf, "./wqsf.rds")

|

||||

}

|

||||

wqsf <- readRDS("./wqsf.rds")

|

||||

|

||||

wqsf |>

|

||||

unnest(krigingsf) |>

|

||||

ggplot(aes(X, Y)) +

|

||||

geom_contour_filled(aes(z = pred), bins = 20) +

|

||||

geom_sf(

|

||||

data = main_water,

|

||||

aes(x = NULL, y = NULL),

|

||||

fill = NA,

|

||||

colour = "black",

|

||||

linewidth = 1

|

||||

) +

|

||||

# scale_fill_gradientn(colors = hcl.colors(100, "RdYlBu")) + # 设置颜色渐变

|

||||

# ggsci::scale_fill_aaas() +

|

||||

coord_sf() +

|

||||

theme_void()

|

||||

```

|

||||

|

||||

### Output

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

#| out-width: 80%

|

||||

#| message: false

|

||||

#| fig-width: 6

|

||||

#| fig-height: 3

|

||||

require(sf)

|

||||

require(sp)

|

||||

require(gstat)

|

||||

require(raster)

|

||||

require(ggplot2)

|

||||

# 读取太湖边界

|

||||

lake_boundary <- st_read("../../data/taihu.shp", quiet = TRUE) |>

|

||||

sf::st_make_valid()

|

||||

main_water <- st_difference(

|

||||

lake_boundary[lake_boundary$Name == "boundary", ],

|

||||

st_union(lake_boundary[lake_boundary$Name != "boundary", ])

|

||||

)

|

||||

|

||||

get_kriging <- function(indf, Yvarname, grid = NULL, boundsf = main_water) {

|

||||

insf <- indf |>

|

||||

sfext::df_to_sf(crs = 4326, coords = c("long", "lat")) |>

|

||||

dplyr::select(-c("long", "lat")) |>

|

||||

dplyr::rename(Y = tidyselect::all_of(Yvarname))

|

||||

if (is.null(grid)) {

|

||||

# 1. 将sf边界转为terra格式

|

||||

v <- terra::vect(boundsf)

|

||||

# 2. 创建基础网格

|

||||

base_grid <- terra::rast(v, resolution = 0.002) # 基础低分辨率

|

||||

# 3. 创建高分辨率区域(例如边界附近)

|

||||

buffer_zone <- buffer(v, width = 0.005) # 边界附近创建缓冲区

|

||||

hi_res_grid <- terra::rast(buffer_zone, resolution = 0.001)

|

||||

# 4. 合并网格

|

||||

final_grid <- merge(base_grid, hi_res_grid)

|

||||

# 5. 转为点并裁剪

|

||||

grid <- terra::as.points(final_grid) |>

|

||||

sf::st_as_sf() |>

|

||||

sf::st_filter(main_water)

|

||||

}

|

||||

insp <- sf::as_Spatial(insf)

|

||||

fit <- automap::autofitVariogram(Y ~ 1, insp)

|

||||

# 克里金插值

|

||||

m <- gstat::gstat(

|

||||

formula = Y ~ 1,

|

||||

data = insp,

|

||||

model = fit$var_model

|

||||

)

|

||||

predsf <- predict(m, grid) |>

|

||||

sf::st_as_sf()

|

||||

outsf <- predsf |>

|

||||

st_coordinates() |>

|

||||

as.data.frame() |>

|

||||

cbind(pred = predsf$var1.pred)

|

||||

return(outsf)

|

||||

}

|

||||

|

||||

|

||||

wqdf <- readxl::read_xlsx("../../data/wqdata.xlsx")

|

||||

|

||||

if (FALSE) {

|

||||

wqsf <- wqdf |>

|

||||

nest(datedf = -date) |>

|

||||

dplyr::mutate(

|

||||

krigingsf = purrr::map(datedf, \(x) {

|

||||

get_kriging(x, "conc", boundsf = main_water)

|

||||

})

|

||||

)

|

||||

saveRDS(wqsf, "./wqsf.rds")

|

||||

}

|

||||

wqsf <- readRDS("./wqsf.rds")

|

||||

|

||||

wqsf |>

|

||||

unnest(krigingsf) |>

|

||||

ggplot(aes(X, Y)) +

|

||||

geom_contour_filled(aes(z = pred), bins = 20) +

|

||||

geom_sf(

|

||||

data = main_water,

|

||||

aes(x = NULL, y = NULL),

|

||||

fill = NA,

|

||||

colour = "black",

|

||||

linewidth = 1

|

||||

) +

|

||||

# scale_fill_gradientn(colors = hcl.colors(100, "RdYlBu")) + # 设置颜色渐变

|

||||

# ggsci::scale_fill_aaas() +

|

||||

coord_sf() +

|

||||

theme_void()

|

||||

```

|

||||

|

||||

:::

|

||||

|

||||

## 学习资源

|

||||

|

||||

1. SF官方文档:https://r-spatial.github.io/sf/

|

||||

2. 《Geocomputation with R》在线书

|

||||

3. R-spatial教程:https://github.com/r-spatial/workshops

|

||||

```

|

||||

|

||||

### 使用说明:

|

||||

1. 需要R 4.0+环境

|

||||

2. 推荐安装依赖:

|

||||

```r

|

||||

install.packages(c("sf", "spatstat", "spdep", "osmdata", "sfnetworks", "ggplot2", "mapview"))

|

||||

remotes::install_github("r-spatial/sfext")

|

||||

```

|

||||

3. 实际案例数据可替换为本地shapefile

|

||||

4. 交互可视化部分需要浏览器支持

|

||||

|

||||

|

||||

## 欢迎讨论!{.center}

|

||||

|

||||

|

||||

`r rmdify::slideend(wechat = FALSE, type = "public", tel = FALSE, thislink = "https://drc.drwater.net/course/public/RWEP/PUB/SD/")`

|

||||

BIN

SD/5.2_空间分析/wqsf.rds

Normal file

BIN

SD/5.2_空间分析/wqsf.rds

Normal file

Binary file not shown.

1

SD/5.2_空间分析/中华人民共和国.json

Normal file

1

SD/5.2_空间分析/中华人民共和国.json

Normal file

File diff suppressed because one or more lines are too long

1

SD/5.3_大数据分析工具/_extensions

Symbolic link

1

SD/5.3_大数据分析工具/_extensions

Symbolic link

@@ -0,0 +1 @@

|

||||

../../_extensions

|

||||

523

SD/5.3_大数据分析工具/index.qmd

Normal file

523

SD/5.3_大数据分析工具/index.qmd

Normal file

@@ -0,0 +1,523 @@

|

||||

---

|

||||

title: "大数据分析工具"

|

||||

subtitle: 《区域水环境污染数据分析实践》<br>Data analysis practice of regional water environment pollution

|

||||

author: 苏命、王为东<br>中国科学院大学资源与环境学院<br>中国科学院生态环境研究中心

|

||||

date: today

|

||||

lang: zh

|

||||

format:

|

||||

revealjs:

|

||||

theme: dark

|

||||

slide-number: true

|

||||

chalkboard:

|

||||

buttons: true

|

||||

preview-links: auto

|

||||

lang: zh

|

||||

toc: true

|

||||

toc-depth: 1

|

||||

toc-title: 大纲

|

||||

logo: ./_extensions/inst/img/ucaslogo.png

|

||||

css: ./_extensions/inst/css/revealjs.css

|

||||

pointer:

|

||||

key: "p"

|

||||

color: "#32cd32"

|

||||

pointerSize: 18

|

||||

revealjs-plugins:

|

||||

- pointer

|

||||

filters:

|

||||

- d2

|

||||

---

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

knitr::opts_chunk$set(echo = TRUE)

|

||||

source("../../coding/_common.R")

|

||||

library(nycflights13)

|

||||

library(tidyverse)

|

||||

```

|

||||

|

||||

# 正则表达式

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配数字:

|

||||

|

||||

- \d:匹配任意数字字符。

|

||||

- \d+:匹配一个或多个数字字符。

|

||||

- [0-9]: 匹配数字

|

||||

|

||||

### 匹配字母:

|

||||

|

||||

- \w:匹配任意字母、数字或下划线字符。

|

||||

- \w+:匹配一个或多个字母、数字或下划线字符。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配空白字符:

|

||||

|

||||

- \s:匹配任意空白字符,包括空格、制表符、换行符等。

|

||||

- \s+:匹配一个或多个空白字符。

|

||||

|

||||

### 匹配特定字符:

|

||||

|

||||

- [abc]:匹配字符 a、b 或 c 中的任意一个。

|

||||

- [a-z]:匹配任意小写字母。

|

||||

- [A-Z]:匹配任意大写字母。

|

||||

- [0-9]:匹配任意数字。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配重复次数:

|

||||

|

||||

- {n}:匹配前一个字符恰好 n 次。

|

||||

- {n,}:匹配前一个字符至少 n 次。

|

||||

- {n,m}:匹配前一个字符至少 n 次,但不超过 m 次。

|

||||

|

||||

### 匹配边界:

|

||||

|

||||

- ^:匹配字符串的开头。

|

||||

- $:匹配字符串的结尾。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 匹配特殊字符:

|

||||

|

||||

- \:转义特殊字符,使其按字面意义匹配。

|

||||

- .:匹配任意单个字符。

|

||||

- |:表示“或”关系,匹配两个或多个表达式之一。

|

||||

|

||||

#### 匹配次数:

|

||||

|

||||

- *:匹配前一个字符零次或多次。

|

||||

- +:匹配前一个字符一次或多次。

|

||||

- ?:匹配前一个字符零次或一次。

|

||||

|

||||

## 匹配数字

|

||||

|

||||

### 分组和捕获:

|

||||

|

||||

- ():将一系列模式组合成一个单元,可与特殊字符一起使用。

|

||||

|

||||

### 预定义字符集:

|

||||

|

||||

- \d:任意数字,相当于 [0-9]。

|

||||

- \w:任意字母、数字或下划线字符,相当于 [a-zA-Z0-9_]。

|

||||

- \s:任意空白字符,相当于 [ \t\n\r\f\v]。

|

||||

|

||||

|

||||

|

||||

## 实例

|

||||

|

||||

```{r}

|

||||

library(babynames)

|

||||

(x <- c("apple", "apppple", "abc123def"))

|

||||

x[str_detect(x, "[0-9]")]

|

||||

x[str_detect(x, "abc[0-9]+")]

|

||||

x[str_detect(x, "pp")]

|

||||

x[str_detect(x, "p{4}")]

|

||||

x[str_detect(x, "p{4}")]

|

||||

x[str_detect("apple", "ap*")]

|

||||

x[str_detect("apple", "app*")]

|

||||

x[str_detect("apple", "a..le")]

|

||||

```

|

||||

|

||||

## 练习

|

||||

|

||||

|

||||

找出`babyname`中名字含有ar的行

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

babynames |>

|

||||

filter(str_detect(name, "ar"))

|

||||

```

|

||||

|

||||

## 练习

|

||||

|

||||

|

||||

找出`babyname`中名字含有ar或者以ry结尾的行。

|

||||

|

||||

```{r}

|

||||

#| echo: false

|

||||

babynames |>

|

||||

filter(str_detect(name, "ar"))

|

||||

```

|

||||

|

||||

|

||||

|

||||

# Linux基础知识与开发工具

|

||||

|

||||

|

||||

## SSH - 安全远程连接

|

||||

|

||||

```bash

|

||||

# 连接到远程服务器

|

||||

ssh username@remote.server.com

|

||||

|

||||

# 使用特定端口连接

|

||||

ssh -p 2222 username@remote.server.com

|

||||

|

||||

# 执行远程命令

|

||||

ssh username@server "ls -l /tmp"

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 远程管理云服务器

|

||||

- 自动化脚本执行

|

||||

|

||||

|

||||

## Windows下的SSH工具 - PuTTY

|

||||

|

||||

|

||||

|

||||

```bash

|

||||

# 主要功能:

|

||||

1. 保存会话配置(IP/端口/认证信息)

|

||||

2. 支持SSH/Telnet/Serial连接

|

||||

3. 公钥认证(配合Pageant使用)

|

||||

4. 端口转发(SSH隧道)

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 连接Linux服务器进行管理

|

||||

- 建立SSH隧道访问内网资源

|

||||

|

||||

|

||||

|

||||

## SCP - 安全文件传输

|

||||

|

||||

```bash

|

||||

# 复制本地文件到远程

|

||||

scp file.txt username@remote:/path/to/dest

|

||||

|

||||

# 从远程复制到本地

|

||||

scp username@remote:/path/file.txt .

|

||||

|

||||

# 递归复制目录

|

||||

scp -r dir/ username@remote:/path/

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 部署网站文件到生产环境

|

||||

- 备份远程日志文件

|

||||

|

||||

|

||||

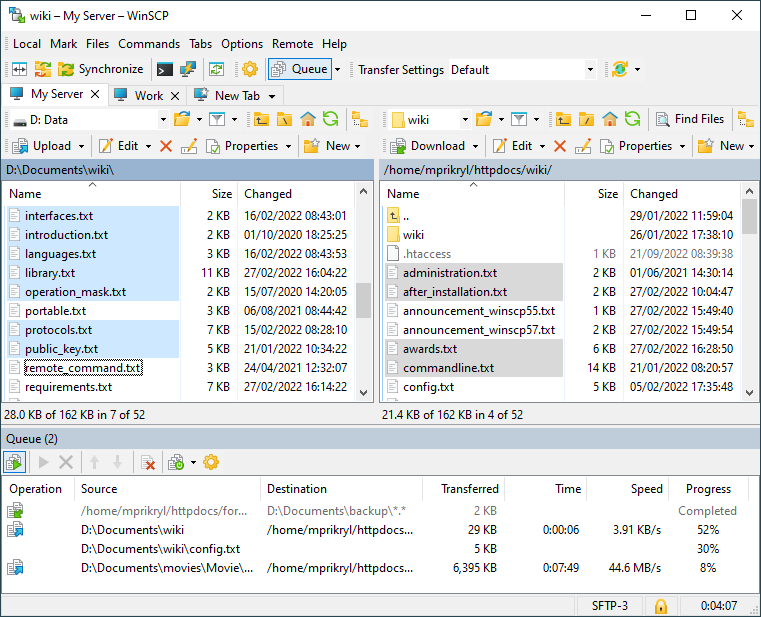

## Windows下的SCP工具 - WinSCP

|

||||

|

||||

|

||||

|

||||

```bash

|

||||

# 主要特性:

|

||||

- 图形化SFTP/SCP客户端

|

||||

- 与PuTTY集成

|

||||

- 支持拖拽操作

|

||||

- 可保存常用连接

|

||||

- 批处理脚本功能

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 可视化管理服务器文件

|

||||

- 本地与服务器间同步代码

|

||||

|

||||

|

||||

## Windows替代bash的工具

|

||||

|

||||

**1. Git Bash**

|

||||

```bash

|

||||

# 包含常用Linux命令

|

||||

ls, grep, ssh, scp, awk等

|

||||

```

|

||||

|

||||

**2. WSL (Windows Subsystem for Linux)**

|

||||

```bash

|

||||

# 完整Linux环境

|

||||

sudo apt install python3

|

||||

```

|

||||

|

||||

**3. Cygwin**

|

||||

```bash

|

||||

# POSIX兼容环境

|

||||

cygwin.com/setup-x86_64.exe

|

||||

```

|

||||

|

||||

|

||||

## Windows终端工具推荐

|

||||

|

||||

| 工具 | 特点 | 适用场景 |

|

||||

|------|------|----------|

|

||||

| **Windows Terminal** | 多标签/色彩支持 | 日常开发 |

|

||||

| **MobaXterm** | 内置X11/插件 | 远程开发 |

|

||||

| **Tabby** | 跨平台/主题丰富 | 多平台用户 |

|

||||

| **ConEmu** | 高度可定制 | 高级用户 |

|

||||

|

||||

|

||||

|

||||

## 开发工具跨平台方案

|

||||

|

||||

**最佳实践**:

|

||||

1. 代码编辑器统一使用VS Code(全平台支持)

|

||||

- 配合Remote-SSH插件

|

||||

2. 数据库工具使用DBeaver/DataGrip

|

||||

3. 版本控制使用Git GUI(GitKraken/Fork)

|

||||

|

||||

```bash

|

||||

# 保持环境一致的建议:

|

||||

- 使用WSL2开发环境

|

||||

- 配置相同的.ssh/config文件

|

||||

- 共享相同的IDE配置

|

||||

```

|

||||

|

||||

|

||||

## grep - 文本搜索

|

||||

|

||||

```bash

|

||||

# 基本搜索

|

||||

grep "error" logfile.txt

|

||||

|

||||

# 递归搜索目录

|

||||

grep -r "function" src/

|

||||

|

||||

# 显示行号

|

||||

grep -n "TODO" *.py

|

||||

|

||||

# 反向匹配

|

||||

grep -v "success" results.log

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 在日志中查找错误信息

|

||||

- 分析代码库中的特定模式

|

||||

|

||||

|

||||

## sed - 流编辑器

|

||||

|

||||

```bash

|

||||

# 替换文本

|

||||

sed 's/old/new/g' file.txt

|

||||

|

||||

# 删除空行

|

||||

sed '/^$/d' file.txt

|

||||

|

||||

# 原地编辑文件

|

||||

sed -i 's/python/python3/g' script.sh

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 批量重命名文件中的字符串

|

||||

- 清理数据文件中的不规范格式

|

||||

|

||||

|

||||

## awk - 文本处理

|

||||

|

||||

```bash

|

||||

# 打印特定列

|

||||

awk '{print $1,$3}' data.csv

|

||||

|

||||

# 条件过滤

|

||||

awk '$3 > 100 {print $0}' sales.txt

|

||||

|

||||

# 使用分隔符

|

||||

awk -F',' '{print $2}' users.csv

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 分析服务器日志统计状态码

|

||||

- 处理CSV格式数据

|

||||

|

||||

|

||||

## find & xargs - 文件查找处理

|

||||

|

||||

```bash

|

||||

# 查找并删除

|

||||

find . -name "*.tmp" -delete

|

||||

|

||||

# 查找并处理

|

||||

find /var/log -name "*.log" | xargs ls -lh

|

||||

|

||||

# 复杂组合

|

||||

find src/ -type f -name "*.js" | xargs grep -l "deprecated"

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 清理旧临时文件

|

||||

- 批量处理项目文件

|

||||

|

||||

|

||||

## 代码编辑器

|

||||

|

||||

- **VS Code**:现代轻量级编辑器

|

||||

- 丰富的插件生态

|

||||

- 内置Git支持

|

||||

- **Vim**:终端高效编辑器

|

||||

```bash

|

||||

vim file.txt

|

||||

```

|

||||

- **RStudio**:R语言集成环境

|

||||

- **JupyterLab**:交互式笔记本环境

|

||||

|

||||

|

||||

|

||||

## Git版本控制

|

||||

|

||||

```bash

|

||||

# 基本工作流

|

||||

git clone https://repo.url

|

||||

git add .

|

||||

git commit -m "message"

|

||||

git push

|

||||

|

||||

# 分支管理

|

||||

git checkout -b new-feature

|

||||

git merge main

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- 团队协作开发

|

||||

- 版本回滚与问题追踪

|

||||

|

||||

|

||||

## MySQL数据库

|

||||

|

||||

```sql

|

||||

-- 基本查询

|

||||

SELECT * FROM users WHERE age > 18;

|

||||

|

||||

-- 创建表

|

||||

CREATE TABLE products (

|

||||

id INT PRIMARY KEY,

|

||||

name VARCHAR(100)

|

||||

);

|

||||

|

||||

-- 数据操作

|

||||

INSERT INTO products VALUES (1, 'Laptop');

|

||||

UPDATE products SET price=999 WHERE id=1;

|

||||

```

|

||||

|

||||

**案例**:

|

||||

- Web应用数据存储

|

||||

- 数据分析与报表生成

|

||||

|

||||

|

||||

|

||||

# 公开数据获取

|

||||

|

||||

## 案例:全国气象数据

|

||||

|

||||

```{bash}

|

||||

#| eval: false

|

||||

#!/bin/bash

|

||||

logfn="${HOME}/service/log/nationalairquality/nationalairquality.log"

|

||||

workdirfn="${HOME}/service/nationalairquality/"

|

||||

mkdir -p "$(dirname "${logfn}")"

|

||||

touch "${logfn}"

|

||||

|

||||

echo "$(date '+%Y-%m-%d %H:%M:%S'): 下载大气质量数据" >>"${logfn}"

|

||||

|

||||

declare -a citynames

|

||||

|

||||

citynames=(北京市 石家庄市 秦皇岛市)

|

||||

|

||||

jsonfn="${workdirfn}/nationalairquality_$(date '+%Y%d%m%H').json"

|

||||

jsonfn="nationalairquality_$(date '+%Y%d%m%H').json"

|

||||

# [[ -f "${jsonfn}" ]] && rm "${jsonfn}"

|

||||

echo "[" >"${jsonfn}"

|

||||

|

||||

for cityname in "${citynames[@]}"; do

|

||||

echo "下载${cityname}空气质量数据..." >>"${logfn}"

|

||||

curl "https://air.cnemc.cn:18007/CityData/GetAQIDataPublishLive?cityName=${cityname}" \

|

||||

-H 'Accept: */*' \

|

||||